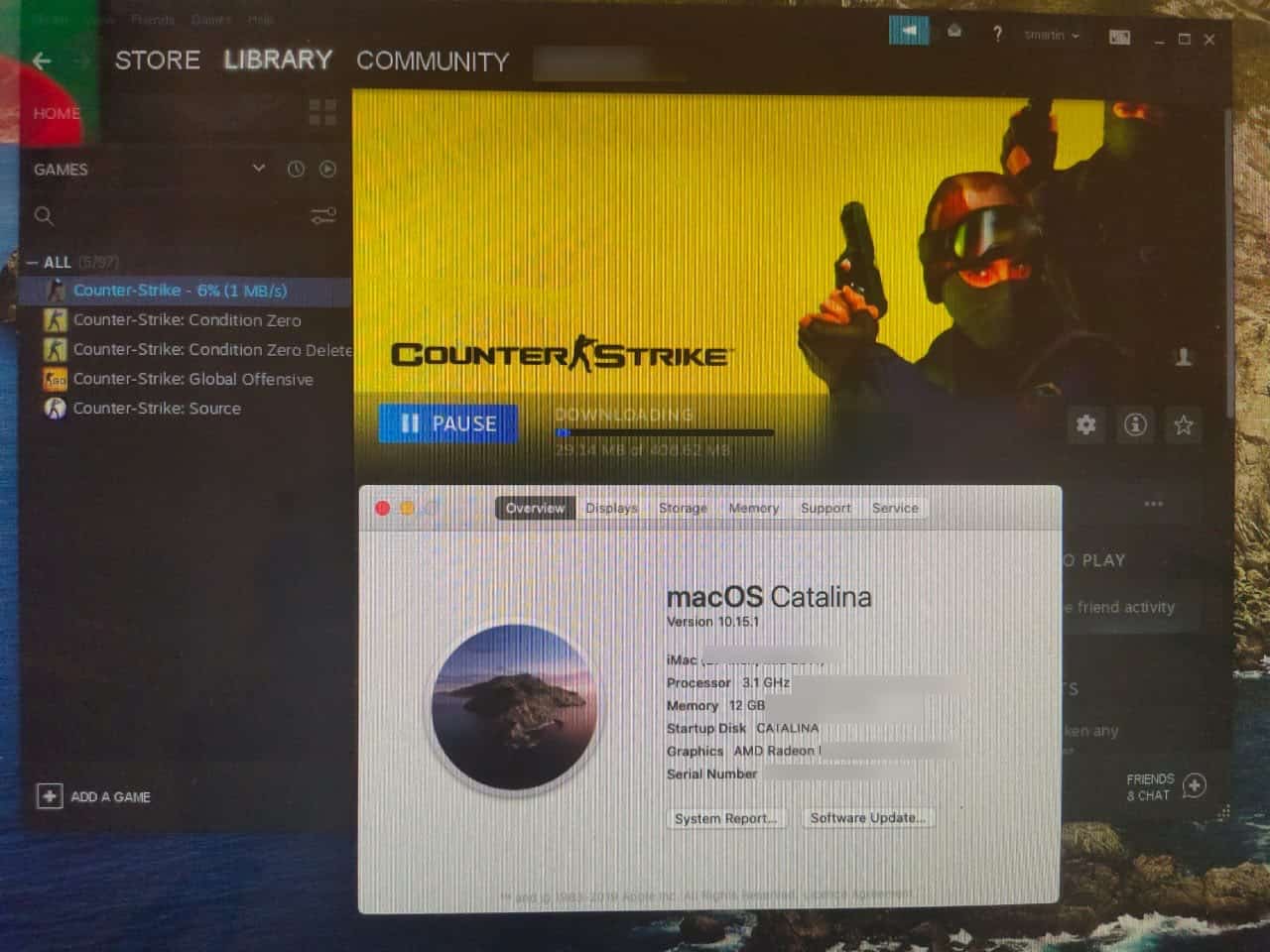

On May 6, 2002, Steve Jobs opened WWDC with a funeral for Classic Mac OS:

Yesterday, 18 years later, OS X finally reached its own end of the road: the next version of macOS is not 10.16, but 11.0.

There was no funeral.

The OS X Family Tree

OS X has one of the most fascinating family trees in technology; understanding its significance requires understanding each of its forebears.

Unix: Unix does refer to a specific operating system that originated in AT&T's Bell Labs (the copyrights of which are owned by Novell), but thanks to a settlement with the U.S. government (which was widely criticized for going easy on the telecoms giant), Unix was widely licensed, to universities in particular. One of the most popular variants that resulted was the Berkeley Software Distribution (BSD), developed at the University of California, Berkeley.

What all of the variations of Unix had in common was the Unix Philosophy; the Bell System Technical Journal explained in 1978:

A number of maxims have gained currency among the builders and users of the Unix system to explain and promote its characteristic style:

- Make each program do one thing well. To do a new job, build afresh rather than complicate old programs by adding new 'features'.

- Expect the output of every program to become the input to another, as yet unknown, program. Don't clutter output with extraneous information. Avoid stringently columnar or binary input formats. Don't insist on interactive input.

- Design and build software, even operating systems, to be tried early, ideally within weeks. Don't hesitate to throw away the clumsy parts and rebuild them.

- Use tools in preference to unskilled help to lighten a programming task, even if you have to detour to build the tools and expect to throw some of them out after you've finished using them.

[…]

The Unix operating system, the C programming language, and the many tools and techniques developed in this environment are finding extensive use within the Bell System and at universities, government laboratories, and other commercial installations. The style of computing encouraged by this environment is influencing a new generation of programmers and system designers. This, perhaps, is the most exciting part of the Unix story, for the increased productivity fostered by a friendly environment and quality tools is essential to meet ever-increasing demands for software.

Today you can still run nearly any Unix program on macOS, but with some of the security changes made in Catalina in particular, you are liable to run into permissions issues, especially when it comes to seamlessly linking programs together.

Mach: Mach was a microkernel developed at Carnegie Mellon University; the concept of a microkernel is to run the smallest amount of software necessary for the core functionality of an operating system in the most privileged mode, and put all other functionality into less privileged modes. OS X doesn't have a true microkernel, since the BSD subsystem runs in the same privileged mode for performance reasons, but the modular structure of a microkernel-type design makes it easier to port to different processor architectures, or to remove operating system functionality that is not needed for different types of devices (there is, of course, lots of other work that goes into porting a modern operating system; this is a dramatic simplification).

More generally, the spirit of a microkernel — a small centralized piece of software passing messages between different components — is how modern computers, particularly mobile devices, are architected: multiple specialized chips doing discrete tasks under the direction of an operating system organizing it all.

Xerox: The story of Steve Jobs' visit to Xerox is as mistaken as it is well known; the Xerox Alto and its groundbreaking mouse-driven graphical user interface were well known around Silicon Valley, thanks to the thousands of demos the Palo Alto Research Center (PARC) gave and the papers it had published. PARC's problem was that Xerox cared more about making money from copy machines than about figuring out how to bring the Alto to market.

That doesn't change just how much of an inspiration the Alto was to Jobs in particular: after the visit he pushed the Lisa computer to have a graphical user interface, and it was why he took over the Macintosh project, determined to make an inexpensive computer that was far easier to use than anything that had come before it.

Apple: The Macintosh was not the first Apple computer: that was the Apple I, and then the iconic Apple II. What made the Apple II unique was its explicit focus on consumers, not businesses; interestingly, what made the Apple II successful was VisiCalc, the first spreadsheet application, which is to say that the Apple II sold primarily to businesses. Still, the truth is that Apple has been a consumer company from the very beginning.

This is why the Mac is best thought of as the child of Apple and Xerox: Apple understood consumers and wanted to sell products to them, and Xerox provided the inspiration for what those products should look like.

It was NeXTSTEP, meanwhile, that was the child of Unix and Mach: an extremely modular design, from its own architecture to its focus on object-oriented programming and its inclusion of different 'kits' that were easy to fit together to create new programs.

And so we arrive at OS X, the child of the classic Macintosh OS and NeXTSTEP. The best way to think about OS X is that it took the consumer focus and interface paradigms of the Macintosh and layered them on top of NeXTSTEP's technology. In other words, the Unix side of the family was the defining feature of OS X.

Return of the Mac

In 2005 Paul Graham wrote an essay entitled Return of the Mac explaining why it was that developers were returning to Apple for the first time since the 1980s:

All the best hackers I know are gradually switching to Macs. My friend Robert said his whole research group at MIT recently bought themselves Powerbooks. These guys are not the graphic designers and grandmas who were buying Macs at Apple's low point in the mid 1990s. They're about as hardcore OS hackers as you can get.

The reason, of course, is OS X. Powerbooks are beautifully designed and run FreeBSD. What more do you need to know?

Graham argued that hackers were a leading indicator, which is why he advised his dad to buy Apple stock:

If you want to know what ordinary people will be doing with computers in ten years, just walk around the CS department at a good university. Whatever they're doing, you'll be doing.

In the matter of 'platforms' this tendency is even more pronounced, because novel software originates with great hackers, and they tend to write it first for whatever computer they personally use. And software sells hardware. Many if not most of the initial sales of the Apple II came from people who bought one to run VisiCalc. And why did Bricklin and Frankston write VisiCalc for the Apple II? Because they personally liked it. They could have chosen any machine to make into a star.

If you want to attract hackers to write software that will sell your hardware, you have to make it something that they themselves use. It's not enough to make it 'open.' It has to be open and good. And open and good is what Macs are again, finally.

What is interesting is that Graham's stock call could not have been more prescient: Apple's stock closed at $5.15 on March 31, 2005, and $358.87 yesterday;1 the primary driver of that increase, though, was not the Mac, but rather the iPhone.

The iOS Sibling

If one were to add iOS to the family tree I illustrated above, most would put it under Mac OS X; I think, though, iOS is best understood as another child of Classic Mac and NeXT, but this time the resemblance is to the Apple side of the family. Or to put it another way, while the Mac was the perfect machine for 'hackers', to use Graham's term, the iPhone was one of the purest expressions of Apple's focus on consumers.

The iPhone, as Steve Jobs declared at its unveiling in 2007, runs OS X, but it was certainly not Mac OS X: it ran the same XNU kernel, and most of the same subsystem (with some new additions to support things like cellular capability), but it had a completely new interface. That interface, notably, did not include a terminal; you could not run arbitrary Unix programs.2 That new interface, though, was far more accessible to regular users.

What is more notable is that the iPhone gave up parts of the Unix Philosophy as well: applications all ran in individual sandboxes, which meant that they could not access the data of other applications or of the operating system. This was great for security, and is the primary reason why iOS doesn't suffer from malware and apps that drag the entire system into a morass, but one certainly couldn't 'expect the output of every program to become the input to another'; until sharing extensions were added in iOS 8, programs couldn't share data with each other at all, and even now it is tightly regulated.

At the same time, the App Store made principle one — 'make each program do one thing well' — accessible to normal consumers. Whatever possible use case you could imagine for a computer that was always with you, well, 'There's an App for That':

Consumers didn't care that these apps couldn't talk to each other: they were simply happy they existed, and that they could download as many as they wanted without worrying about bad things happening to their phone — or to them. While sandboxing protected the operating system, the fact that every app was reviewed by Apple weeded out apps that didn't work, or worse, tried to scam end users.

This ended up being good for developers, at least from a business point of view: sure, the degree to which the iPhone was locked down grated on many, but Apple's approach created millions of new customers that never existed for the Mac; the fact it was closed and good was a benefit for everyone.

macOS 11.0

What is striking about macOS 11.0 is the degree to which it feels more like a son of iOS than the sibling that Mac OS X was:

- macOS 11.0 runs on ARM, just like iOS; in fact the Developer Transition Kit that Apple is making available to developers has the same A12Z chip as the iPad Pro.

- macOS 11.0 has a user interface overhaul that not only appears to be heavily inspired by iOS, but also seems geared for touch.

- macOS 11.0 attempts to acquire developers not primarily by being open and good, but by being easy and good enough.

The seeds for this last point were planted last year with Catalyst, which made it easier to port iPad apps to the Mac; with macOS 11.0, at least the version which will run on ARM, Apple isn't even requiring a recompile: iOS apps will simply run on macOS 11.0, and they will be in the Mac App Store by default (developers can opt out).

In this way Apple is using their most powerful point of leverage — all of those iPhone consumers, which compel developers to build apps for the iPhone, Apple's rules notwithstanding — to address what the company perceives as a weakness: the paucity of apps in the Mac App Store.

Is the lack of Mac App Store apps really a weakness, though? When I consider the apps that I use regularly on the Mac, a huge number of them are not available in the Mac App Store, not because the developers are protesting Apple's 30% cut of sales, but simply because they would not work given the limitations Apple puts on apps in the Mac App Store.

The primary limitation, notably, is the same sandboxing technology that made iOS so trustworthy; that trustworthiness has always come at a cost, namely the loss of the ability to build tools that 'lighten a task', to use the words from the Unix Philosophy, even if the means to do so opens the door to more nefarious ends.

Fortunately macOS 11.0 preserves its NeXTSTEP heritage: non-Mac App Store apps are still allowed, for better (new use cases constrained only by imagination and permissions dialogs) and worse (access to other apps and your files). What is notable is that this was even a concern: Apple's recent moves on iOS, particularly around requiring in-app purchase for SaaS apps, feel like a drift towards Xerox, a company that was so obsessed with making money it ignored that it was giving demos of the future to its competitors; one wondered if the obsession would filter down to the Mac.

For now the answer is no, and that is a reason for optimism: an open platform on top of the tremendous hardware innovation being driven by the iPhone sounds amazing. Moreover, one can argue (hope?) it is a more reliable driver of future growth than squeezing every last penny out of the greenfield created by the iPhone. At a minimum, leaving open the possibility of entirely new things leaves far more future optionality than drawing the strings ever more tightly as on iOS. OS X's legacy lives, for now.

I wrote a follow-up to this article in this Daily Update.

- Yes, this incorporates Apple's 7:1 stock split [↩]

- Unless you jailbroke your phone [↩]

We've made every attempt to make this as straightforward as possible, but there's a lot more ground to cover here than in the first part of the guide. If you find glaring errors or have suggestions to make the process easier, let us know on our Discord.

With that out of the way, let's talk about what you need to get 3D acceleration up and running:

If you've arrived here without context, check out part one of the guide here.

General

- A desktop. The vast majority of laptops are completely incompatible with passthrough on Mac OS.

- A working install from part 1 of this guide, set up to use virt-manager

- A motherboard that supports IOMMU (most AMD chipsets since 990FX, most mainstream and HEDT chipsets on Intel since Sandy Bridge)

- A CPU that fully supports virtualization extensions (most modern CPUs, barring the odd exception like the i7-4770K and i5-4670K; Haswell Refresh K chips, e.g. '4X90K', work fine)

- 2 GPUs with different PCI device IDs. One of them can be an integrated GPU. Usually this means just 2 different models of GPU, but there are some exceptions, like the AMD HD 7970/R9 280X or the R9 290X and 390X. You can check here (or here for AMD) to confirm you have 2 different device IDs. You can work around this problem if you already have 2 of the same GPU, but it isn't ideal. If you plan on passing multiple USB controllers or NVMe devices, it may also be necessary to check those with a tool like lspci.

- The guest GPU also needs to support UEFI boot. Check here to see if your model does.

- Recent versions of QEMU (3.0-4.0) and libvirt.

AMD CPUs

- The most recent mainline Linux kernel (all platforms)

- BIOS prior to AGESA 0.0.7.2 or a patched kernel with the workaround applied (Ryzen)

- Most recent available BIOS (Threadripper)

- GPU isolation fixes applied, e.g. CSM toggle and/or efifb:off (Ryzen)

- ACS patch (lower-end chipsets or highly populated PCIe slots)

- A second discrete GPU (most AMD CPUs do not ship with an iGPU)

Intel CPUs

- ACS patch (in most cases only needed if you have many expansion cards installed; mainstream and budget chipsets only, HEDT is unaffected)

- A second discrete GPU (HEDT and F-sku CPUs only)

Nvidia GPUs

- A 700 series card. High Sierra works with cards up to the 10 series, but Mojave ends support for the 900, 10 and 20 series and all future Nvidia GPUs. Cards older than the 700 series may not have UEFI support, making them incompatible.

- A Google search to make sure your card is compatible with Mac OS on Macs/hackintoshes without patching or flashing.

AMD GPUs

- A UEFI-compatible card. AMD's refresh cycle makes this a bit more complicated to work out, but generally Pitcairn chips and newer work fine; check your card's BIOS for 'UEFI Support' on TechPowerUp to confirm.

- A card without the reset bug (anything older than Hawaii is bug-free, but it's a total crapshoot on any newer card; Vega and Fiji seem especially susceptible. 300 series cards may also have Mac OS-specific compatibility issues.)

- A Google search to make sure your card is compatible with Mac OS on Macs/hackintoshes without patching or flashing.

Getting Started with VFIO-PCI

Provided you have hardware that supports this process, it should be relatively straightforward.

First, you want to enable virtualization extensions and IOMMU in your UEFI. The exact names and locations vary by vendor and motherboard. These features are usually titled something like 'virtualization support,' 'VT-x,' or 'SVM'; IOMMU is usually labelled 'VT-d' or 'AMD-Vi,' if not just 'IOMMU support.'

Once you've enabled these features, you need to tell Linux to use them, as well as which PCI devices to reserve for your VM. The best way to do this is to change your kernel command-line boot options, which you do by editing your bootloader's configuration files. We'll be covering GRUB 2 here because it's the most common. Distributions that use systemd-boot, like Pop!_OS, will have to do things differently.

Run lspci -nnk | grep -E 'VGA|Audio'. This will output a list of installed devices relevant to your GPU. You can also just run lspci -nnk to get all attached devices, in case you want to pass through something else, like an NVMe drive or a USB controller. Look for the device IDs of each device you intend to pass through. For example, my GTX 1070 is listed as [10de:1b81], plus [10de:10f0] for the HDMI audio. You need to use every device ID associated with your device, and most GPUs have both a VGA controller and an audio controller. Some cards, in particular VR-ready Nvidia GPUs and the new 20 series GPUs, will have more devices you'll need to pass, so refer to the full output to make sure you got all of them.
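If you'd rather not pick the IDs out by eye, here is a rough shell sketch that pulls them out of lspci -nn output. It assumes each device line starts with its PCI address and carries the vendor:device pair in brackets, as in the GTX 1070 example. pci_ids and join_ids are made-up helper names, not part of any tool.

```shell
#!/usr/bin/env bash
# Sketch: list the vendor:device IDs of PCI functions whose lspci line
# matches a pattern (default: VGA or Audio). Reads `lspci -nn` output on
# stdin, so it can be tried on saved output without touching hardware.

# A device line: optional domain, then bus:slot.function and a space.
# Indented Subsystem / Kernel driver lines from `lspci -nnk` won't match.
PCI_LINE_RE='^([0-9a-f]{4}:)?[0-9a-f]{2}:[0-9a-f]{2}\.[0-7] '

pci_ids() {
  local pattern="${1:-VGA|Audio}"
  grep -E "$PCI_LINE_RE" |
    grep -E "$pattern" |
    # Keep only bracketed vendor:device pairs such as [10de:1b81];
    # class codes like [0300] have no colon and are skipped.
    grep -oE '\[[0-9a-f]{4}:[0-9a-f]{4}\]' |
    # Strip the brackets.
    sed -e 's/^\[//' -e 's/\]$//'
}

# Join one-ID-per-line input with commas, the form vfio-pci.ids= takes.
join_ids() {
  paste -sd, -
}
```

For instance, lspci -nn | pci_ids '^01:00\.' | join_ids should print every function in slot 01:00 as one comma-separated list. The default pattern also catches onboard audio, so double-check the result against the full lspci -nnk output.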

If two devices you intend to pass through have the same ID, you will have to use a workaround to make them functional. Check the troubleshooting section for more information.
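One quick way to spot that situation before editing anything is to look for IDs that occur more than once. Here is a minimal sketch that again reads lspci -nn output on stdin. dup_ids is a hypothetical name.

```shell
#!/usr/bin/env bash
# Sketch: print vendor:device IDs that appear on more than one PCI
# function in `lspci -nn` output read from stdin. Two identical GPUs
# show up here, because vfio-pci.ids= can't tell them apart.
dup_ids() {
  # Device lines only (they start with [domain:]bus:slot.function).
  grep -E '^([0-9a-f]{4}:)?[0-9a-f]{2}:[0-9a-f]{2}\.[0-7] ' |
    grep -oE '\[[0-9a-f]{4}:[0-9a-f]{4}\]' |
    LC_ALL=C sort |
    # uniq -d prints one copy of each line that occurs more than once.
    uniq -d |
    sed -e 's/^\[//' -e 's/\]$//'
}
```

Run it as lspci -nn | dup_ids. Some built-in devices (identical PCIe root ports, for example) can legitimately share an ID, so only matches on the devices you plan to pass through matter here.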

Once you have the IDs of all the devices you intend to pass through taken down, it's time to edit your GRUB config (usually /etc/default/grub):

It should look something like this:

In the line GRUB_CMDLINE_LINUX= add these arguments, separated by spaces: intel_iommu=on OR amd_iommu=on, iommu=pt and vfio-pci.ids= followed by the device IDs you want to use on the VM separated by commas. For example, if I wanted to pass through my GTX 1070, I'd add vfio-pci.ids=10de:1b81,10de:10f0. Save and exit your editor.
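If you prefer to script this step, a sketch along these lines appends the arguments for you. It assumes GRUB_CMDLINE_LINUX sits on one line with a double-quoted value, as in a stock /etc/default/grub. add_vfio_args is a hypothetical helper, and it keeps the untouched file as a .bak copy.

```shell
#!/usr/bin/env bash
# Sketch: append the IOMMU and vfio-pci arguments to GRUB_CMDLINE_LINUX.
# Usage: add_vfio_args FILE intel|amd ID[,ID...]
# Assumes a single-line, double-quoted value. The original is kept as FILE.bak.
add_vfio_args() {
  local file="$1" vendor="$2" ids="$3"
  case "$vendor" in
    intel|amd) ;;
    *) echo "vendor must be intel or amd" >&2; return 1 ;;
  esac
  local args="${vendor}_iommu=on iommu=pt vfio-pci.ids=${ids}"
  # 1st expression: insert the args just before the closing quote.
  # 2nd expression: drop the leading space left over if the value was empty.
  # GRUB_CMDLINE_LINUX_DEFAULT is untouched: after "LINUX" it has "_", not "=".
  sed -i.bak -E \
    -e "s/^(GRUB_CMDLINE_LINUX=\"[^\"]*)\"/\1 ${args}\"/" \
    -e 's/^(GRUB_CMDLINE_LINUX=") /\1/' \
    "$file"
}
```

For the GTX 1070 example you would run add_vfio_args /etc/default/grub intel 10de:1b81,10de:10f0 from a root shell (a shell function can't be called directly through sudo). Then look over the file by eye before regenerating grub.cfg.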

Run grub-mkconfig -o /boot/grub/grub.cfg. This path may differ on other distros, so check that this is the location of your grub.cfg before running the command and change it as necessary. Some distributions also use a different tool for this step, e.g. update-grub.

Reboot.

Verifying Changes

Now that you have your devices isolated and the relevant features enabled, it's time to check that your machine is registering them properly.

Grab iommu.sh from our companion repo, make it executable with chmod +x iommu.sh, and run it with ./iommu.sh to see your IOMMU groups. No output means you didn't enable one of the relevant UEFI features, or didn't revise your kernel command-line options correctly. If the GPU and the other devices you want to pass to the guest are in their own groups, move on to the next section. If not, refer to the troubleshooting section.
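We don't reproduce iommu.sh here, so treat the following as a stand-in written for illustration rather than the companion script itself. It assumes the usual sysfs layout, /sys/kernel/iommu_groups/<group>/devices/<address>. list_iommu_groups is a hypothetical name, and its optional argument only exists so it can be pointed at a fake tree.

```shell
#!/usr/bin/env bash
# Sketch: list every PCI device by IOMMU group by walking
# /sys/kernel/iommu_groups/<group>/devices/<address>.
# An optional argument overrides the sysfs root (handy for testing).
list_iommu_groups() {
  local root="${1:-/sys/kernel/iommu_groups}" dev group
  for dev in "$root"/*/devices/*; do
    # With IOMMU disabled the glob matches nothing and stays literal.
    [ -e "$dev" ] || continue
    group="${dev%/devices/*}"   # .../iommu_groups/<group>
    group="${group##*/}"        # <group>
    printf 'IOMMU group %s: %s\n' "$group" "${dev##*/}"
  done | sort -t ' ' -k3,3n -k4,4   # numeric by group, then by address
}
```

As with iommu.sh, empty output points at IOMMU being off. Each address can be fed to lspci -nns (e.g. lspci -nns 0000:01:00.0) to see what the device actually is.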

Run dmesg | grep vfio to ensure that your devices are being isolated. No output means that the vfio-pci module isn't loading and/or you did not enter the correct values in your kernel commandline options.
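dmesg only tells you that vfio-pci claimed something. To check a specific device, you can also read the driver link that sysfs keeps for each PCI function. Here is a minimal sketch. Note that sysfs uses the full address including the domain, e.g. 0000:01:00.0. PCI_SYSFS is a made-up variable that only exists so the function can be tested.

```shell
#!/usr/bin/env bash
# Sketch: show which kernel driver is bound to each given PCI address by
# reading the /sys/bus/pci/devices/<address>/driver symlink.
# PCI_SYSFS is a hypothetical override of the sysfs path, for testing only.
pci_driver() {
  local root="${PCI_SYSFS:-/sys/bus/pci/devices}" addr
  for addr in "$@"; do
    if [ -L "$root/$addr/driver" ]; then
      # The link points at .../drivers/<name>; keep just <name>.
      printf '%s %s\n' "$addr" "$(basename "$(readlink "$root/$addr/driver")")"
    else
      printf '%s (no driver bound)\n' "$addr"
    fi
  done
}
```

pci_driver 0000:01:00.0 0000:01:00.1 should report vfio-pci for both functions of the example card. If it shows nouveau, nvidia or amdgpu instead, the host driver got there first. lspci -nnk -s 01:00.0 reports the same thing in its 'Kernel driver in use' line.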

From here the process is straightforward. Start virt-manager (conversion from raw QEMU is covered in part one) and make sure the resolution set in the config.plist and in the OVMF options match each other and the display resolution you intend to use on the GPU. If you aren't sure, just use 1080p.

Click Add Hardware in the VM details, select PCI Host Device, select a device you've isolated with vfio-pci, and hit OK. Repeat for each device you want to pass through. Remove all SPICE and QXL devices (including spice:channel), attach a monitor to the GPU, and boot into the VM. Install 3D drivers, and you're ready to go. Note that at this point you'll also need to add your mouse and keyboard to the VM as USB devices, pass through a USB controller, or set up evdev to get input in the guest.
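Under the hood, each PCI host device you add becomes a <hostdev> element in the VM's libvirt XML. If you'd rather script it, a sketch like this prints one for a given address. hostdev_xml is a hypothetical helper. managed='yes' (libvirt detaches and reattaches the device for you) is our choice here, matching what virt-manager typically writes, not something this guide requires.

```shell
#!/usr/bin/env bash
# Sketch: print a libvirt <hostdev> element for one PCI address, roughly
# what virt-manager's "PCI Host Device" adds to the domain XML.
# Accepts 0000:01:00.0 or the short 01:00.0 form (domain 0000 assumed).
hostdev_xml() {
  local addr="$1" domain bus slot func
  case "$addr" in
    ????:*:*) ;;                # already has a domain
    *) addr="0000:$addr" ;;
  esac
  # Split DDDD:BB:SS.F on ':' and '.'.
  IFS=':.' read -r domain bus slot func <<< "$addr"
  cat <<EOF
<hostdev mode='subsystem' type='pci' managed='yes'>
  <source>
    <address domain='0x$domain' bus='0x$bus' slot='0x$slot' function='0x$func'/>
  </source>
</hostdev>
EOF
}
```

You could then save the output with hostdev_xml 0000:01:00.0 > gpu.xml and add it with virsh attach-device <vm-name> gpu.xml --config, where <vm-name> is whatever your VM is called in virt-manager.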

If all goes well, you just need to install drivers and you're ready to use 3D on your Mac OS VM.

Mac OS VM Networking

Fixing What Ain't Broke:

NAT is fine for most people, but if you use SMB shares or need to access a NAS or other networked device, it can make that difficult. You can switch your network device to macvtap, but that isolates your VM from the host machine, which can also present problems.

If you want access to other networked devices on your guest machine without stopping guest-host communication, you'll have to set up a bridged network for it. There are several ways to do this, but we'll be covering the methods that use NetworkManager, since that's the most common backend. If you use wicd or systemd-networkd, refer to documentation on those packages for bridge creation and configuration.

Via Network GUI:

In most modern desktop environments, this can be done entirely in the GUI. Open the network settings dialog, add a connection, select Bridge as the type, add your network interface as the slave device, and then activate the bridge (sometimes you need to restart NetworkManager if the changes don't take effect immediately). From there, all you need to do is add the bridge as a network device in virt-manager.
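The same bridge can be set up from a terminal with nmcli. Here is a rough sketch, assuming NetworkManager manages your wired NIC. make_bridge, br0 and enp3s0 are placeholders. bridge-slave is the long-standing nmcli connection type for a bridge port, but newer NetworkManager releases may document it under different wording, so check man nmcli on your system.

```shell
#!/usr/bin/env bash
# Sketch: create a NetworkManager bridge with nmcli instead of the GUI.
# Usage: make_bridge BRIDGE NIC, e.g. make_bridge br0 enp3s0
# br0 / enp3s0 are placeholders; list interfaces with `nmcli device status`.
make_bridge() {
  local bridge="${1:?usage: make_bridge BRIDGE NIC}"
  local nic="${2:?usage: make_bridge BRIDGE NIC}"
  # Create the bridge connection itself.
  nmcli connection add type bridge ifname "$bridge" con-name "$bridge"
  # Attach the physical NIC to the bridge as a port.
  nmcli connection add type bridge-slave ifname "$nic" master "$bridge" \
    con-name "$bridge-port-$nic"
  # Bring the bridge up.
  nmcli connection up "$bridge"
}
```

After make_bridge br0 enp3s0, the NIC's previous wired profile may still be active. If the bridge doesn't pick up an address, take that profile down with nmcli connection down followed by its name. Then select br0 as the network source in virt-manager, just as in the GUI route.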

[…]

The Unix operating system, the C programming language, and the many tools and techniques developed in this environment are finding extensive use within the Bell System and at universities, government laboratories, and other commercial installations. The style of computing encouraged by this environment is influencing a new generation of programmers and system designers. This, perhaps, is the most exciting part of the Unix story, for the increased productivity fostered by a friendly environment and quality tools is essential to meet every-increasing demands for software.

Today you can still run nearly any Unix program on macOS, but particularly with some of the security changes made in Catalina, you are liable to run into permissions issues, particularly when it comes to seamlessly linking programs together.

Mach: Mach was a microkernel developed at Carnegie Mellon University; the concept of a microkernel is to run the smallest amount of software necessary for the core functionality of an operating system in the most privileged mode, and put all other functionality into less privileged modes. OS X doesn't have a true microkernel — the BSD subsystem runs in the same privileged mode, for performance reasons — but the modular structure of a microkernel-type design makes it easier to port to different processor architectures, or remove operating system functionality that is not needed for different types of devices (there is, of course, lots of other work that goes into a porting a modern operating system; this is a dramatic simplification).

More generally, the spirit of a microkernel — a small centralized piece of software passing messages between different components — is how modern computers, particularly mobile devices, are architected: multiple specialized chips doing discrete tasks under the direction of an operating system organizing it all.

Xerox: The story of Steve Jobs' visiting Xerox is as mistaken as it is well-known; the Xerox Alto and its groundbreaking mouse-driven graphical user interface was well-known around Silicon Valley, thanks to the thousands of demos the Palo Alto Research Center (PARC) did and the papers it had published. PARC's problem is that Xerox cared more about making money from copy machines than in figuring out how to bring the Alto to market.

That doesn't change just how much of an inspiration the Alto was to Jobs in particular: after the visit he pushed the Lisa computer to have a graphical user interface, and it was why he took over the Macintosh project, determined to make an inexpensive computer that was far easier to use than anything that had come before it.

Apple: The Macintosh was not the first Apple computer: that was the Apple I, and then the iconic Apple II. What made the Apple II unique was its explicit focus on consumers, not businesses; interestingly, what made the Apple II successful was VisiCalc, the first spreadsheet application, which is to say that the Apple II sold primarily to businesses. Still, the truth is that Apple has been a consumer company from the very beginning.

This is why the Mac is best thought of as the child of Apple and Xerox: Apple understood consumers and wanted to sell products to them, and Xerox provided the inspiration for what those products should look like.

It was NeXTSTEP, meanwhile, that was the child of Unix and Mach: an extremely modular design, from its own architecture to its focus on object-oriented programming and its inclusion of different 'kits' that were easy to fit together to create new programs.

And so we arrive at OS X, the child of the classic Macintosh OS and NeXTSTEP. The best way to think about OS X is that it took the consumer focus and interface paradigms of the Macintosh and layered them on top of NeXTSTEP's technology. In other words, the Unix side of the family was the defining feature of OS X.

Return of the Mac

In 2005 Paul Graham wrote an essay entitled Return of the Mac explaining why it was that developers were returning to Apple for the first time since the 1980s:

All the best hackers I know are gradually switching to Macs. My friend Robert said his whole research group at MIT recently bought themselves Powerbooks. These guys are not the graphic designers and grandmas who were buying Macs at Apple's low point in the mid 1990s. They're about as hardcore OS hackers as you can get.

The reason, of course, is OS X. Powerbooks are beautifully designed and run FreeBSD. What more do you need to know?

https://downkfil664.weebly.com/blog/chess-has-exciting-spectacular-strategy-mac-os. Graham argued that hackers were a leading indicator, which is why he advised his dad to buy Apple stock:

If you want to know what ordinary people will be doing with computers in ten years, just walk around the CS department at a good university. Pink hibiscus mac os. Whatever they're doing, you'll be doing.

In the matter of 'platforms' this tendency is even more pronounced, because novel software originates with great hackers, and they tend to write it first for whatever computer they personally use. And software sells hardware. Many if not most of the initial sales of the Apple II came from people who bought one to run VisiCalc. And why did Bricklin and Frankston write VisiCalc for the Apple II? Because they personally liked it. They could have chosen any machine to make into a star.

If you want to attract hackers to write software that will sell your hardware, you have to make it something that they themselves use. It's not enough to make it 'open.' It has to be open and good. And open and good is what Macs are again, finally.

What is interesting is that Graham's stock call could not have been more prescient: Apple's stock closed at $5.15 on March 31, 2005, and $358.87 yesterday;1 the primary driver of that increase, though, was not the Mac, but rather the iPhone. Different slot machines.

The iOS Sibling

If one were to add iOS to the family tree I illustrated above, most would put it under Mac OS X; I think, though, iOS is best understood as another child of Classic Mac and NeXT, but this time the resemblance is to the Apple side of the family. Or to put it another way, while the Mac was the perfect machine for 'hackers', to use Graham's term, the iPhone was one of the purest expressions of Apple's focus on consumers.

The iPhone, as Steve Jobs declared at its unveiling in 2007, runs OS X, but it was certainly not Mac OS X: it ran the same XNU kernel, and most of the same subsystem (with some new additions to support things like cellular capability), but it had a completely new interface. That interface, notably, did not include a terminal; you could not run arbitrary Unix programs.2 That new interface, though, was far more accessible to regular users.

What is more notable is that the iPhone gave up parts of the Unix Philosophy as well: applications all ran in individual sandboxes, which meant that they could not access the data of other applications or of the operating system. This was great for security, and is the primary reason why iOS doesn't suffer from malware and apps that drag the entire system into a morass, but one certainly couldn't 'expect the output of every program to become the input to another'; until sharing extensions were added in iOS 8 programs couldn't share data with each other at all, and even now it is tightly regulated.

At the same time, the App Store made principle one — 'make each program do one thing well' — accessible to normal consumers. Whatever possible use case you could imagine for a computer that was always with you, well, 'There's an App for That':

Consumers didn't care that these apps couldn't talk to each other: they were simply happy they existed, and that they could download as many as they wanted without worrying about bad things happening to their phone — or to them. While sandboxing protected the operating system, the fact that every app was reviewed by Apple weeded out apps that didn't work, or worse, tried to scam end users.

This ended up being good for developers, at least from a business point-of-view: sure, the degree to which the iPhone was locked down grated on many, but Apple's approach created millions of new customers that never existed for the Mac; the fact it was closed and good was a benefit for everyone.

macOS 11.0

What is striking about macOS 11.0 is the degree to which is feels more like a son of iOS than the sibling that Mac OS X was:

- macOS 11.0 runs on ARM, just like iOS; in fact the Developer Transition Kit that Apple is making available to developers has the same A12Z chip as the iPad Pro.

- macOS 11.0 has a user interface overhaul that not only appears to be heavily inspired by iOS, but also seems geared for touch.

- macOS 11.0 attempts to acquire developers not primarily by being open and good, but by being easy and good enough.

The seeds for this last point were planted last year with Catalyst, which made it easier to port iPad apps to the Mac; with macOS 11.0, at least the version which will run on ARM, Apple isn't even requiring a recompile: iOS apps will simply run on macOS 11.0, and they will be in the Mac App Store by default (developers can opt-out).

In this way Apple is using their most powerful point of leverage — all of those iPhone consumers, which compel developers to build apps for the iPhone, Apple's rules notwithstanding — to address what the company perceives as a weakness: the paucity of apps in the Mac App Store.

Is the lack of Mac App Store apps really a weakness, though? When I consider the apps that I use regularly on the Mac, a huge number of them are not available in the Mac App Store, not because the developers are protesting Apple's 30% cut of sales, but simply because they would not work given the limitations Apple puts on apps in the Mac App Store.

The primary limitation, notably, is the same sandboxing technology that made iOS so trustworthy; that trustworthiness has always come at the cost of the ability to build tools that do things that 'lighten a task', to use the words from the Unix Philosophy, even if the means to do so opens the door to more nefarious ends.

Fortunately macOS 11.0 preserves its NeXTSTEP heritage: non-Mac App Store apps are still allowed, for better (new use cases constrained only by imagination and permissions dialogs) and worse (access to other apps and your files). What is notable is that this was even a concern: Apple's recent moves on iOS, particularly around requiring in-app purchase for SaaS apps, feel like a drift towards Xerox, a company that was so obsessed with making money it ignored that it was giving demos of the future to its competitors; one wondered if the obsession would filter down to the Mac.

For now the answer is no, and that is a reason for optimism: an open platform on top of the tremendous hardware innovation being driven by the iPhone sounds amazing. Moreover, one can argue (hope?) it is a more reliable driver of future growth than squeezing every last penny out of the greenfield created by the iPhone. At a minimum, leaving open the possibility of entirely new things leaves far more future optionality than drawing the strings ever more tightly as on iOS. OS X's legacy lives, for now.

I wrote a follow-up to this article in this Daily Update.

- Yes, this incorporates Apple's 7:1 stock split

- Unless you jailbroke your phone


We've made every attempt to make this as straightforward as possible, but there's a lot more ground to cover here than in the first part of the guide. If you find glaring errors or have suggestions to make the process easier, let us know on our Discord.

With that out of the way, let's talk about what you need to get 3D acceleration up and running:

If you've arrived here without context, check out part one of the guide here.

General

- A Desktop. The vast majority of laptops are completely incompatible with passthrough on Mac OS.

- A working install from part 1 of this guide, set up to use virt-manager

- A motherboard that supports IOMMU (most AMD chipsets since 990FX, most mainstream and HEDT chipsets on Intel since Sandy Bridge)

- A CPU that fully supports virtualization extensions (most modern CPUs, barring the odd exception like the i7-4770K and i5-4670K; Haswell Refresh K chips, e.g. the '4x90K' models, work fine.)

- 2 GPUs with different PCI device IDs. One of them can be an integrated GPU. Usually this means just 2 different models of GPU, but there are some exceptions, like the AMD HD 7970/R9 280X or the R9 290X and 390X. You can check here (or here for AMD) to confirm you have 2 different device IDs. You can work around this problem if you already have 2 of the same GPU, but it isn't ideal. If you plan on passing multiple USB controllers or NVMe devices, it may also be necessary to check those with a tool like lspci.

- The guest GPU also needs to support UEFI boot. Check here to see if your model does.

- Recent versions of Qemu (3.0-4.0) and Libvirt.

AMD CPUs

- The most recent mainline Linux kernel (all platforms)

- BIOS prior to AGESA 0.0.7.2, or a patched kernel with the workaround applied (Ryzen)

- Most recent available BIOS (Threadripper)

- GPU isolation fixes applied, e.g. CSM toggle and/or efifb:off (Ryzen)

- ACS patch (lower-end chipsets or heavily populated PCIe slots)

- A second discrete GPU (most AMD CPUs do not ship with an iGPU)

Intel CPUs

- ACS patch (in most cases only needed if you have many expansion cards installed; mainstream and budget chipsets only, HEDT is unaffected)

- A second discrete GPU (HEDT and F-SKU CPUs only)

Nvidia GPUs

- A 700 series card. High Sierra works with cards up to the 10 series, but Mojave drops support for the 900 and 10 series, and 20 series and newer Nvidia GPUs are not supported at all. Cards older than the 700 series may not have UEFI support, making them incompatible.

- A Google search to make sure your card is compatible with Mac OS on Macs/hackintoshes without patching or flashing.

AMD GPUs

- A UEFI-compatible card. AMD's refresh cycle makes this a bit more complicated to work out, but generally Pitcairn chips and newer work fine; check your card's BIOS entry on TechPowerUp for 'UEFI Support' to confirm.

- A card without the reset bug. Anything older than Hawaii is bug-free, but it's a total crapshoot on any newer card, and Vega and Fiji seem especially susceptible. 300 series cards may also have Mac OS-specific compatibility issues.

- A Google search to make sure your card is compatible with Mac OS on Macs/hackintoshes without patching or flashing.

Getting Started with VFIO-PCI

Provided you have hardware that supports this process, it should be relatively straightforward.

First, enable virtualization extensions and IOMMU in your UEFI. The exact names and locations vary by vendor and motherboard. Virtualization support is usually titled something like 'Virtualization Support', 'VT-x' or 'SVM'; IOMMU is usually labelled 'VT-d' or 'AMD-Vi', if not just 'IOMMU support'.

Once you've enabled these features, you need to tell Linux to use them, as well as what PCI devices to reserve for your vm. The best way of going about this is changing your kernel commandline boot options, which you do by editing your bootloader's configuration files. We'll be covering Grub 2 here because it's the most common. Systemd-boot distributions like Pop!OS will have to do things differently.

Run lspci -nnk | grep -E 'VGA|Audio' to list the installed devices relevant to your GPU. The -E is needed so that grep treats | as "or"; with plain grep it is a literal character and nothing matches. You can also just run lspci -nnk to list all attached devices, in case you want to pass through something else, like an NVMe drive or a USB controller. Look for the device IDs of each device you intend to pass through. For example, my GTX 1070 is listed as [10de:1b81], with [10de:10f0] for its HDMI audio. You need to use every device ID associated with your card, and most GPUs have both a VGA and an audio controller. Some cards, in particular VR-ready Nvidia GPUs and the new 20 series GPUs, have more devices you'll need to pass through, so refer to the full output to make sure you got all of them.
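A small filter can collect those IDs so you don't have to copy them by hand. This is a sketch and not part of the original guide. vfio_ids is a hypothetical helper that reads lspci -nn output and prints the [vendor:device] IDs of every function at a given bus address, joined with commas in the form vfio-pci.ids expects. The address 01:00. is only an example; use the one lspci shows for your card.

```sh
# vfio_ids (hypothetical helper): read `lspci -nn` output on stdin and print the
# vendor:device IDs of every function whose bus address starts with $1.
vfio_ids() {
    # 1. keep only lines for the chosen card (all of its functions: .0, .1, ...)
    # 2. take the last [xxxx:xxxx] on each line, which is the vendor:device pair
    # 3. join the IDs into one comma-separated line
    grep "^$1" | sed -n 's/.*\[\([0-9a-f]\{4\}:[0-9a-f]\{4\}\)\].*/\1/p' | paste -s -d ',' -
}
```

Usage: lspci -nn | vfio_ids '01:00.' should print something like 10de:1b81,10de:10f0 for the GTX 1070 example.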

If two devices you intend to pass through have the same ID, you will have to use a workaround to make them functional. Check the troubleshooting section for more information.

Once you have taken down the IDs of all the devices you intend to pass through, it's time to edit your GRUB config, which on most distributions is /etc/default/grub.

In the line GRUB_CMDLINE_LINUX= add these arguments, separated by spaces: intel_iommu=on OR amd_iommu=on, iommu=pt and vfio-pci.ids= followed by the device IDs you want to use on the VM separated by commas. For example, if I wanted to pass through my GTX 1070, I'd add vfio-pci.ids=10de:1b81,10de:10f0. Save and exit your editor.
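Here is what the edited line in /etc/default/grub might look like with the GTX 1070 IDs from the example above on an AMD system. This is a sketch: keep any arguments your distribution already has there, and use intel_iommu=on instead on Intel CPUs. The file is sourced as shell, so keep the whole value inside one pair of quotes.

```sh
# /etc/default/grub (excerpt)
# amd_iommu=on  -> intel_iommu=on on Intel CPUs
# iommu=pt      -> identity-map devices the host keeps, avoiding extra translation
# vfio-pci.ids  -> every ID belonging to the card, comma-separated, no spaces
GRUB_CMDLINE_LINUX="amd_iommu=on iommu=pt vfio-pci.ids=10de:1b81,10de:10f0"
```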

Run grub-mkconfig -o /boot/grub/grub.cfg. This path may be different for you on different distros, so make sure to check that this is the location of your grub.cfg prior to running this and change it as necessary. The tool to do this may also be different on certain distributions, e.g. update-grub.

Reboot.

Verifying Changes

Now that you have your devices isolated and the relevant features enabled, it's time to check that your machine is registering them properly.

Grab iommu.sh from our companion repo, make it executable with chmod +x iommu.sh, and run it with ./iommu.sh to see your IOMMU groups. No output means you didn't enable one of the relevant UEFI features, or didn't revise your kernel command-line options correctly. If the GPU and the other devices you want to pass to the guest are in their own groups, move on to the next section. If not, refer to the troubleshooting section.
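The sketch below shows roughly what a script like iommu.sh does: it walks the IOMMU group tree the kernel exposes in sysfs. It is not the companion repo's script. list_iommu_groups is a hypothetical name, and the optional argument exists only so the function can be pointed at a test directory.

```sh
# list_iommu_groups (hypothetical): print each IOMMU group and its PCI devices.
# $1 defaults to the kernel's sysfs location.
list_iommu_groups() {
    root="${1:-/sys/kernel/iommu_groups}"
    # Group directories are named 0, 1, 2, ...; sort them numerically.
    for group in $(ls "$root" 2>/dev/null | sort -n); do
        echo "IOMMU Group $group:"
        for dev in "$root/$group"/devices/*; do
            [ -e "$dev" ] || continue   # skip the unexpanded glob of an empty group
            # lspci -nns <address> prints the device name with its [vendor:device] IDs.
            printf '\t%s\n' "$(lspci -nns "${dev##*/}")"
        done
    done
}
```

Source the file and call list_iommu_groups with no arguments to read the live system. A card is ready for passthrough when its group contains only its own functions.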

Run dmesg | grep vfio to ensure that your devices are being isolated. No output means that the vfio-pci module isn't loading and/or you did not enter the correct values in your kernel commandline options.
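dmesg shows that vfio-pci saw the device at boot. To check which driver owns a device right now, you can read its driver symlink in sysfs, which holds the same information lspci -nnk prints as "Kernel driver in use". A minimal sketch: bound_driver is a hypothetical helper, and 0000:01:00.0 is an example address in the full form lspci -D prints.

```sh
# bound_driver (hypothetical): print the driver bound to PCI device $1, or "none".
# $2 defaults to the sysfs device directory.
bound_driver() {
    link="${2:-/sys/bus/pci/devices}/$1/driver"
    if [ -L "$link" ]; then
        # The link points at .../bus/pci/drivers/<name>; keep only <name>.
        basename "$(readlink "$link")"
    else
        echo none
    fi
}
```

For an isolated card, bound_driver 0000:01:00.0 should print vfio-pci rather than nouveau, amdgpu or radeon.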

From here the process is straightforward. Start virt-manager (conversion from raw QEMU is covered in part one) and make sure the native resolutions set in config.plist and in the OVMF options match each other and the display resolution you intend to use on the GPU. If you aren't sure, just use 1080p.

Click Add Hardware in the VM details, select PCI Host Device, select a device you've isolated with vfio-pci, and hit OK. Repeat for each device you want to pass through. Remove all SPICE and QXL devices (including spice:channel), attach a monitor to the GPU and boot into the VM. Install 3D drivers, and you're ready to go. Note that at this point you'll also need to add your mouse and keyboard to the VM as USB devices, pass through a USB controller, or set up evdev to get input in the guest.
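Each PCI host device that virt-manager adds becomes a hostdev element in the VM's libvirt XML. The helper below is a hypothetical sketch that prints that element for a full PCI address, which can be handy if you prefer virsh to the GUI.

```sh
# hostdev_xml (hypothetical): print a libvirt <hostdev> element for a PCI
# address such as 0000:01:00.0. managed='yes' lets libvirt detach the device
# from its host driver when the VM starts.
hostdev_xml() {
    # Split "DDDD:BB:SS.F" on ':' and '.' into domain, bus, slot and function.
    old_ifs=$IFS
    IFS=':.'
    set -- $1
    IFS=$old_ifs
    cat <<EOF
<hostdev mode='subsystem' type='pci' managed='yes'>
  <source>
    <address domain='0x$1' bus='0x$2' slot='0x$3' function='0x$4'/>
  </source>
</hostdev>
EOF
}
```

Usage: run hostdev_xml 0000:01:00.0 > gpu.xml && virsh attach-device macOS gpu.xml --config, where macOS stands in for your VM's name. Repeat for each function of the card.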

If all goes well, you just need to install drivers and you're ready to use 3d on your OSX VM.

Mac OS VM Networking

Fixing What Ain't Broke:

NAT is fine for most people, but if you use SMB shares or need to access a NAS or other networked device, it can make that difficult. You can switch your network device to macvtap, but that isolates your VM from the host machine, which can also present problems.

If you want access to other networked devices on your guest machine without stopping guest-host communication, you'll have to set up a bridged network for it. There are several ways to do this, but we'll be covering the methods that use NetworkManager, since that's the most common backend. If you use wicd or systemd-networkd, refer to documentation on those packages for bridge creation and configuration.

Via Network GUI:

In most modern desktop environments this can be done entirely in the GUI. Open the network settings dialog, add a connection, select Bridge as the type, add your network interface as the slave device, and then activate the bridge. If the changes don't take effect immediately, you may need to restart NetworkManager. From there, all you need to do is add the bridge as a network device in virt-manager.

Via NMCLI:

Not everyone uses a full desktop environment, but you can do this with nmcli as well:

Run ifconfig or nmcli to get the names of your devices, which you'll need in the next steps. Take down or copy the name of the adapter you use to connect to the internet.

Next, run these commands, substituting the placeholders with the device name of the network adapter you want to bridge to the guest. They create a bridge device, connect the bridge to your network adapter, and create a tap for the guest system, respectively:
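Here is a sketch of those three steps with nmcli, wrapped in a hypothetical make_bridge function. It assumes the names used in the next paragraph (br1 and tap0). The first argument is your wired adapter's name (for example enp3s0). The optional second argument is the UID that should own tap0, which defaults to the current user; pass your own UID if you run it through sudo. Check the option names against nmcli(1) for your NetworkManager version.

```sh
# make_bridge (hypothetical): bridge a wired adapter and add a tap for the guest.
make_bridge() {
    nic="$1"                    # physical adapter, e.g. enp3s0
    owner="${2:-$(id -u)}"      # user who will own tap0
    # 1. Create the bridge device.
    nmcli connection add type bridge ifname br1 con-name br1 stp no
    # 2. Connect the physical adapter to the bridge.
    nmcli connection add type bridge-slave ifname "$nic" master br1
    # 3. Create a persistent tap device for the guest and attach it to br1.
    nmcli connection add type tun ifname tap0 con-name tap0 mode tap owner "$owner" slave-type bridge master br1
}
```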

From here, remove the NAT device in virt-manager, and add a new network device with the connection set to br1: host device tap0. You may need to restart network manager for the device to activate properly.

NOTE: Wireless adapters may not work with this method. The ones that do need to support AP/Mesh Point mode and have multiple channels available. Check for these by running iw list. From there you can set up a virtual AP with hostapd and connect to that with the bridge. We won't be covering the details of this process here because it's very involved and requires a lot of prior knowledge about linux networking to set up correctly.
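You can scan iw list for the interface modes mentioned above with a filter like the hypothetical supports_ap_or_mesh helper below. It only looks for "* AP" or "* mesh point" entries. It does not check the channel requirement, so read the "valid interface combinations" part of the output yourself.

```sh
# supports_ap_or_mesh (hypothetical): succeed if `iw list` output on stdin lists
# AP or mesh point mode. Matches "		 * AP" but not "* AP/VLAN".
supports_ap_or_mesh() {
    grep -qE '^[[:space:]]*\* (AP|mesh point)$'
}
```

Usage: iw list | supports_ap_or_mesh && echo "AP or mesh point mode available".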

Other Options:

You can also pass through a PCIe NIC to the VM if you happen to have a Mac OS-compatible model lying around and you're comfortable adding kexts to Clover. This is also the best way to get AirDrop working if you need it.

If all else fails, you can manually specify routes between the host and guest using macvtap and ip, or set up a macvlan. Both are complex and require networking knowledge.

Input Tweaks

Emulated input might be laggy, or give you problems with certain input combinations. This can be fixed using several methods.

Attach HIDs as USB Host devices

This method is the easiest, but has a few drawbacks. Chiefly, you can't switch your keyboard and mouse back to the host system if the VM crashes. It may also need to be adjusted if you change where your devices are plugged in on the host. Just click the add hardware button, select usb host device, and then select your keyboard and mouse. When you start your VM, the devices will be handed off.

Use Evdev

This method uses a technique that allows both good performance and switchable inputs. We have a guide on how to set it up here. Note that because OS X does not support PS/2 input out of the box, you need to change the PS/2 mouse and keyboard devices in your VM's XML to USB ones.
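You can also make that change from the command line: dump the VM's XML, rewrite the bus attribute of the mouse and keyboard input devices, and redefine the VM. The sed filter below is a hypothetical sketch of the rewrite step only.

```sh
# ps2_to_usb (hypothetical): read libvirt XML on stdin and switch PS/2 mouse and
# keyboard <input> devices to USB; every other line passes through unchanged.
ps2_to_usb() {
    sed -E "/<input type='(mouse|keyboard)'/ s/bus='ps2'/bus='usb'/"
}
```

Usage: virsh dumpxml macOS | ps2_to_usb > macOS.xml && virsh define macOS.xml, with macOS standing in for your VM's name. Editing the XML by hand with virsh edit works just as well.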

If you can't get usb devices working for whatever reason (usually due to an outdated qemu version) you can add the VoodooPS2 Kext to your ESP to enable ps2 input. This may limit compatibility with new releases, so make sure to check that you have an alternative before committing.

Use Barrier/Synergy

Synergy and Barrier are networked input packages that allow you to control your host and/or guest on the same machine, or remotely. They offer convenient input, but will not work with certain networking configurations. Synergy is paid software, but Barrier is free and isn't hard to set up, with one caveat on Mac OS: you either want to stick with a version prior to 1.3.6, or install the binary manually.

After that, just follow a synergy configuration guide (barrier is just an open source fork of synergy) to set up your merged input. It's usually as simple as opening the app, setting one as server, entering the network address of the other, and then arranging the virtual merged screens accordingly. Note that if you experience bad performance on your guest with synergy/barrier, you can make the guest the server and pass usb devices as described above, but this will make your input devices unavailable on the host if the VM crashes.

Use a USB Controller and Hardware KVM Switch

Probably the most elegant solution. You need $20-60 in hardware to do it, but it lets you switch your inputs without prior configuration, and without problems if the guest VM crashes. Simply isolate and pass through a USB controller (as you would a GPU in the section above), then plug a USB KVM switch into a port on that controller and into a USB port on the host. Plug your keyboard and mouse into the KVM switch, and press its button to switch your inputs from one system to the other.

Some USB3 controllers are temperamental and don't like being passed through, so stick to usb2 or experiment with the ones you have. Typically newer Asmedia and Intel ones work best. If your built-in USB controller has issues it may still be possible to get it working using a 3rd party script, but this will heavily depend on how your kvm switch operates as well. Your best bet is just to buy a PCIe controller if the one you have doesn't work.

Audio

By default, audio quality isn't the best on OS X guest VMs. There are a few ways around it, but we suggest a hardware-based approach for the best reliability.

Hardware-Based Audio Passthrough

This method is fairly simple. Just buy a class compliant USB audio interface advertised as working in Mac OS, and plug it into a USB controller that you've passed through to the VM as described in the KVM switch section. If you need seamless audio between host and guest systems, we have a guide on how to get that working as well. We regard this option as the best solution if you plan on using both the host and guest system regularly.

HDMI Audio Extraction

If you're already passing through a GPU, you can just use that as your audio output for the VM. Just use your monitor's line out, or grab an audio extractor as described in the linked article above.

Pulseaudio/ALSA passthrough

You can pass through your VM audio via the ich9 device to your host system's audio server. We have a guide that goes into detail on this process here.

CoreAudio to Jack

CoreAudio supports sending system sound through Jack, a versatile and powerful unix sound system. Jack supports networking, so it's possible to connect the guest to the host over the network via Jack. Because Jack is fairly complex and this method requires a specific network setup to get it working, we'll be saving the specifics of it for a future article. On Linux host systems, tools like Carla can make initial Jack setup easier.

Quality of Life

If you find yourself doing a lot of workarounds or want to customize things even further, these are some tools and resources that can make your life easier.

Clover Configurator

This is a tool that automates some aspects of managing Clover and your ESP configuration. It can make things like adding kexts and defining hardware details (needed to get iMessage and other things working) easier. It may change your config.plist in a way that reduces compatibility, so be careful if you elect to use it.

InsanelyMac and AMD-OSX

Forums where people discuss hackintosh installation and maintenance. Many things that work in baremetal hackintoshes will work in a VM, so if you're looking for tweaks that are only relevant to your software configuration, this is a good place to start.

Troubleshooting

As always, first steps when running into issues should be to read through dmesg output on the host after starting the VM and searching for common problems.

No output after passing through my GPU

Make sure you have a compatible version of Mac OS: most Nvidia cards will only work on High Sierra and earlier, and 20 series cards will not work at all. Make sure you don't have SPICE or QXL devices attached, and follow the steps in the verification section to make sure that your vfio-pci configuration works. If it doesn't, you may have to load the driver manually, but this isn't necessary on most modern Linux distributions.

Make sure that your config.plist and OVMF resolution match your monitor's native resolution. How to edit these settings is covered in Part 1.

If all else fails, you can try passing a vBIOS to the card. Download the relevant ROM file from TechPowerUp and add its path to your XML, usually as a rom element (for example <rom file='/path/to/vbios.rom'/>) inside the hostdev section that corresponds to the GPU.

Can't Connect to SMB shares or see other networked devices

Change to a different networking setup as described in the networking section

iMessage/AirDrop/Apple Services not working

You have to configure these just like any other hackintosh. Consult online guides for procedure specifics.

Multiple PCI devices in the same IOMMU group

You need to install the ACS patch. Arch, Fedora and Ubuntu all have prepatched kernel repos. Systemd-boot based Ubuntu derivatives like Pop!OS will need further work to get an installed kernel working. Otherwise, refer to your distro's documentation for the exact procedure needed to switch or patch kernels. You'll also need to add the patch's ACS override option to your kernel command line, the same way you added the vfio-pci device IDs earlier.
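With the widely used out-of-tree ACS override patch, the option is pcie_acs_override, commonly set as shown below. This assumes your kernel carries that particular patch, so check its documentation. Keep the other arguments you added earlier, then regenerate grub.cfg and reboot.

```sh
# /etc/default/grub (excerpt), assuming a kernel with the ACS override patch.
# downstream: split devices behind PCIe downstream ports into separate groups.
# multifunction: split the functions of multifunction devices as well.
GRUB_CMDLINE_LINUX="amd_iommu=on iommu=pt pcie_acs_override=downstream,multifunction vfio-pci.ids=10de:1b81,10de:10f0"
```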

2 identical PCI IDs

You're going to have to add a script that isolates only one card early in the boot process. There are several ways to do this, and our method may not work for you, but this is the methodology we suggest:

Open up a text editor as root and copy/paste this script:
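A minimal sketch of such a script follows. It uses the kernel's driver_override interface in sysfs, the approach the Arch Wiki describes for identical cards, and is not necessarily the script the authors used. DEVS holds hypothetical PCI addresses: list every function of the one card you want isolated, in the full form lspci -D prints. The final block only runs when the file is invoked under its vfioverride.sh name, which keeps the function usable on its own.

```sh
#!/bin/sh
# Hypothetical addresses: every function of the ONE card to hand to vfio-pci.
DEVS="0000:01:00.0 0000:01:00.1"

override_devs() {
    sysfs="${1:-/sys/bus/pci/devices}"
    for dev in $DEVS; do
        [ -e "$sysfs/$dev" ] || continue    # skip addresses that don't exist
        # From now on only vfio-pci may bind this device; other drivers skip it.
        echo "vfio-pci" > "$sysfs/$dev/driver_override"
    done
}

case "$0" in
    *vfioverride.sh)
        override_devs
        # -i ignores "install" lines in /etc/modprobe.d, so loading vfio-pci
        # here doesn't re-run this script through its own install rule.
        modprobe -i vfio-pci
        ;;
esac
```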

Save it as /usr/bin/vfioverride.sh.

From there, run these commands as root:

On Arch, as root, make a new file called pci-isolate.conf in /etc/modprobe.d, open it in an editor, and add the line install vfio-pci /usr/bin/init-top/vfioverride.sh to it. Save it. Make sure modconf is listed in the HOOKS=(...) array of your initramfs config file, mkinitcpio.conf.
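With mkinitcpio, the script also has to be inside the initramfs for the install rule to find it at early boot. Below is a sketch of the relevant mkinitcpio.conf lines, assuming the script path from the install line above. The HOOKS list is mkinitcpio's older default, shown only for illustration: keep your own list and just make sure modconf is in it.

```sh
# /etc/mkinitcpio.conf (excerpt)
# Copy the override script into the initramfs so modprobe can run it early.
FILES=(/usr/bin/init-top/vfioverride.sh)
# modconf pulls /etc/modprobe.d/*.conf (including pci-isolate.conf) into the image.
HOOKS=(base udev autodetect modconf block filesystems keyboard fsck)
```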

If you're on Fedora or RHEL, you can instead add the script path to the install_items+= array and vfio-pci to the add_drivers+= array in your dracut configuration.

And update your initial ramdisk using mkinitcpio, dracut, or update-initramfs depending on your distribution (Arch, RHEL/Fedora and *Buntu respectively.)

NOTE: Script installation methodology varies from distro to distro. You may have to add initramfs hooks for the script to take effect, or force graphics drivers to load later to prevent the card from being captured before it can be isolated. Refer to the Arch Wiki article for a different installation methodology if this one fails. You may also have to add the vfio-pci modules to initramfs hooks if your kernel doesn't load the vfio-pci module automatically.

Reboot and verify your devices are isolated by checking lspci -nnk. The card you isolated should list vfio-pci as its kernel driver in use, while the other card keeps its normal driver.

If not, set vfio-pci to load early with hooks and try again. If it still doesn't work, you may need to compile a kernel that does not load the module and follow the Arch Wiki guide on traditional setup.

The best preventative measure for this problem is to buy different cards in the first place.

I did everything instructed but the GPU still won't isolate/VM crashes or hardlocks system on startup

Your graphics drivers are probably set to load earlier in the boot process than vfio-pci. You can fix this in one of 2 ways:

- blacklisting the graphics driver early

- tell your initial ramdisk to load vfio-pci earlier than your graphics drivers

The first option can be achieved by adding amdgpu,radeon or nouveau to module_blacklist= in your kernel command line options (same way you added vfio device IDs in the first section of this tutorial.)
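For example, to keep nouveau away from an Nvidia guest card, the line from earlier might become the one below. module_blacklist applies to the whole host, so only blacklist a driver your host GPU doesn't rely on.

```sh
# /etc/default/grub (excerpt): nouveau is never loaded, so it can't claim the card.
GRUB_CMDLINE_LINUX="amd_iommu=on iommu=pt vfio-pci.ids=10de:1b81,10de:10f0 module_blacklist=nouveau"
```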


The second is done by adding vfio_pci vfio vfio_iommu_type1 vfio_virqfd to your initramfs early modules list, and removing any graphics drivers set to load at the same time. This process varies depending on your distro.

On mkinitcpio systems (Arch), you add these to the MODULES= section of /etc/mkinitcpio.conf and then rebuild your initramfs by running mkinitcpio -P.
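A sketch of that MODULES line, using the order given above. On recent kernels vfio_virqfd has been folded into the vfio module, so if mkinitcpio reports it as not found, drop it from the list.

```sh
# /etc/mkinitcpio.conf (excerpt): load VFIO before any graphics driver.
# Remove any graphics driver (e.g. amdgpu, radeon, nouveau) from this list.
MODULES=(vfio_pci vfio vfio_iommu_type1 vfio_virqfd)
```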

On dracut systems (Fedora, RHEL, CentOS, Arch in future releases), you add these to a .conf file in the /etc/modules-load.d/ folder.

Images Courtesy Foxlet, Pixabay


Consider Supporting us on Patreon if you like our work, and if you need help or have questions about any of our articles, you can find us on our Discord. We provide RSS feeds as well as regular updates on Twitter if you want to be the first to know about the next part in this series or other projects we're working on.